Machine Learning is revolutionizing industries and transforming our world. This exploration delves into the core concepts, applications, and future of this powerful technology. From its foundational principles to practical implementations, we’ll uncover how machine learning is driving innovation and shaping the future.

This overview provides a comprehensive understanding of machine learning, exploring its various types, algorithms, and applications across diverse sectors. We’ll examine how machine learning is being used in healthcare, finance, and transportation, showcasing its real-world impact. The discussion also includes ethical considerations and future trends, highlighting the evolving nature of this transformative technology.

Introduction to Machine Learning

Machine learning (ML) is a branch of artificial intelligence that allows software applications to become more accurate in predicting outcomes without being explicitly programmed. Instead of relying on hard-coded rules, ML algorithms learn from data, identifying patterns and making predictions or decisions. This capability empowers systems to adapt and improve over time based on the data they encounter.

Machine learning has revolutionized various fields, from healthcare to finance, by enabling systems to automate tasks, analyze complex data, and uncover insights that would be difficult or impossible for humans to identify.

This ability to learn and adapt from data forms the bedrock of many modern applications.

Core Concepts of Machine Learning

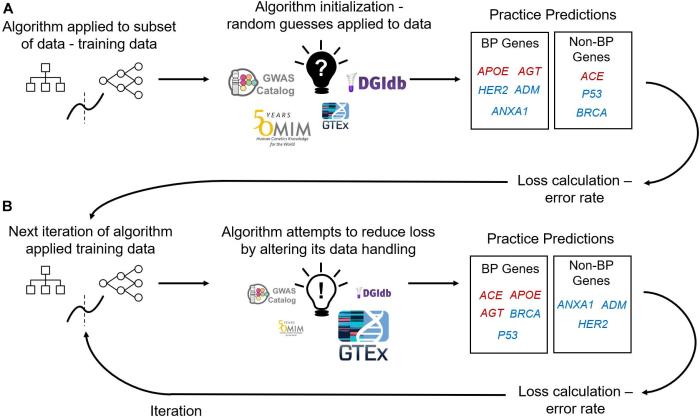

Machine learning algorithms use mathematical models to learn from data. These models are trained on a dataset, which is a collection of examples. The algorithms identify patterns and relationships in the data and use this knowledge to make predictions or decisions on new, unseen data. This iterative process of learning and improving is a hallmark of machine learning.

Types of Machine Learning

Machine learning algorithms are broadly categorized into three main types: supervised, unsupervised, and reinforcement learning. Understanding the differences between these types is crucial for selecting the appropriate approach for a given task.

Supervised Learning

Supervised learning algorithms learn from labeled data, where each data point is associated with a known output or target variable. The algorithm learns the relationship between the input features and the output variable, allowing it to predict the output for new, unseen input data. This type of learning is akin to learning from examples with correct answers.

Unsupervised Learning

Unsupervised learning algorithms learn from unlabeled data, where no target variable is associated with the data points. The algorithms discover hidden patterns, structures, and relationships within the data. This type of learning is valuable for tasks like clustering, dimensionality reduction, and association rule mining, where the goal is to find hidden patterns in the data.

Reinforcement Learning

Reinforcement learning algorithms learn through trial and error. The algorithm interacts with an environment, taking actions and receiving rewards or penalties based on the outcome of those actions. The goal is to learn a policy that maximizes the cumulative reward over time. Think of it like learning through a reward-and-punishment system.
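The trial-and-error loop described above can be sketched with tabular Q-learning, one common reinforcement learning method (the text does not name a specific algorithm, so this choice is an assumption). The five-cell corridor environment, the names `step` and `train`, and every hyperparameter value are hypothetical and chosen only to keep the example small.

```python
import random

# Hypothetical toy environment: a corridor of 5 cells with the goal in the last one.
N_STATES = 5
GOAL = N_STATES - 1
LEFT, RIGHT = 0, 1


def step(state, action):
    """Move one cell left or right; the only reward is 1.0 for reaching the goal."""
    next_state = max(state - 1, 0) if action == LEFT else state + 1
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def train(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn action values q[state][action] from random exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap the episode length
            action = rng.choice((LEFT, RIGHT))  # explore uniformly at random
            next_state, reward, done = step(state, action)
            # Q-learning update: nudge the estimate toward
            # reward + discounted value of the best next action.
            future = 0.0 if done else gamma * max(q[next_state])
            q[state][action] += alpha * (reward + future - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
# The learned policy picks the action with the higher estimated value in each cell.
policy = ["right" if values[RIGHT] > values[LEFT] else "left" for values in q_table[:GOAL]]
print(policy)
```

Because the reward is discounted by `gamma` at every step, cells closer to the goal end up with higher values, which is what steers the policy toward the goal.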

Comparison of Machine Learning Types

| Type | Description | Example |
|---|---|---|
| Supervised | Learns from labeled data to predict outcomes. | Predicting house prices based on features like size and location, given historical data with corresponding prices. |
| Unsupervised | Discovers hidden patterns in unlabeled data. | Grouping customers with similar purchasing habits into different segments for targeted marketing. |
| Reinforcement | Learns through trial and error by interacting with an environment. | Training a robot to navigate a maze by rewarding it for reaching the goal and penalizing it for collisions. |

Applications of Machine Learning

Machine learning, a subset of artificial intelligence, is rapidly transforming various industries by automating tasks, improving decision-making, and enabling unprecedented insights from data. Its ability to identify patterns and make predictions is driving innovation across sectors, from healthcare to finance and beyond. This section delves into specific applications and the impact of machine learning on society.

Real-World Applications of Machine Learning

Machine learning is no longer a futuristic concept; its applications are pervasive in our daily lives. From recommending products on online platforms to detecting fraudulent transactions, machine learning algorithms are quietly shaping our interactions and experiences. This section explores five significant real-world applications.

- Product Recommendations: Online retail platforms leverage machine learning algorithms to understand customer preferences and tailor product recommendations. These algorithms analyze browsing history, purchase patterns, and demographics to suggest relevant items, increasing customer engagement and sales. For example, Amazon uses sophisticated recommendation systems to suggest products to customers, significantly improving the shopping experience and driving sales.

- Fraud Detection: Financial institutions employ machine learning to identify fraudulent transactions in real-time. By analyzing transaction data, including amount, location, and time, these algorithms can flag suspicious activities and prevent financial losses. This is crucial in combating online fraud and protecting customers from unauthorized charges.

- Medical Diagnosis: Machine learning models can analyze medical images (X-rays, CT scans) and patient data to assist in diagnosing diseases. These models can identify patterns and anomalies that may be missed by human clinicians, supporting earlier and more accurate diagnoses. For example, machine learning algorithms are used to detect cancerous tumors in mammograms, in some studies matching or exceeding the accuracy of traditional methods.

- Personalized Education: Machine learning algorithms can adapt to individual student needs, providing personalized learning experiences. By analyzing student performance, these systems can identify knowledge gaps and adjust the curriculum accordingly, leading to improved learning outcomes. This allows educators to tailor instruction to individual needs and enhance student engagement and learning.

- Autonomous Vehicles: Machine learning is fundamental to the development of self-driving cars. These vehicles use algorithms to perceive their environment, make decisions, and navigate safely. Algorithms analyze sensor data, such as camera images and radar signals, to interpret the surrounding environment and plan appropriate actions.

Transformation of Industries by Machine Learning

Machine learning is rapidly transforming various industries, automating processes, improving efficiency, and enabling new revenue streams. Its ability to analyze vast datasets allows for unprecedented insights into customer behavior, market trends, and operational processes.

- Healthcare: Machine learning is revolutionizing healthcare by enabling faster diagnoses, personalized treatment plans, and drug discovery. These applications improve patient outcomes and reduce healthcare costs. For example, machine learning algorithms are used to predict patient readmission risks, allowing hospitals to proactively intervene and improve patient care.

- Finance: Machine learning is enhancing financial services by automating tasks, detecting fraud, and managing risk. Algorithms analyze vast amounts of financial data to identify patterns and predict market trends, enabling better investment decisions and risk management strategies. For example, machine learning algorithms are used to assess creditworthiness, minimizing the risk of loan defaults.

- Transportation: Machine learning is transforming transportation by optimizing logistics, improving traffic flow, and enhancing safety. Algorithms can predict traffic patterns, optimize delivery routes, and improve safety features in vehicles, ultimately increasing efficiency and reducing costs. For example, machine learning is used in logistics to optimize delivery routes, reducing fuel consumption and delivery times.

Impact of Machine Learning on Society

The widespread adoption of machine learning has profound implications for society. It can improve efficiency, reduce costs, and enhance safety across numerous sectors. However, careful consideration of ethical implications is paramount to ensure responsible development and deployment.

Algorithms in Machine Learning

Machine learning algorithms are the core of any machine learning system. They dictate how the system learns from data and makes predictions or decisions. Understanding the various types and their characteristics is crucial for selecting the right algorithm for a specific task. Different algorithms excel in different scenarios, and choosing the appropriate one significantly impacts the accuracy and efficiency of the machine learning model.

Common Machine Learning Algorithms

A wide array of algorithms is employed in machine learning. This section covers common examples from two of the categories introduced earlier: supervised and unsupervised learning. Each category tackles distinct learning tasks, using different approaches to find patterns and relationships within data. The selection of an algorithm depends heavily on the nature of the data and the desired outcome.

Supervised Learning Algorithms

Supervised learning algorithms learn from labeled data, where each data point is associated with a known output or target variable. These algorithms aim to model the relationship between the input features and the target variable, allowing them to predict the output for new, unseen data.

- Linear Regression: This algorithm establishes a linear relationship between the input variables and the target variable. It’s a fundamental algorithm, often used for predicting continuous values. For instance, predicting house prices based on size and location falls under this category. With a single input variable, the model is y = mx + b, where y is the dependent variable, x is the independent variable, m is the slope, and b is the y-intercept; with several inputs, each input gets its own coefficient. A key strength is its simplicity and interpretability. However, it struggles with complex non-linear relationships.

- Logistic Regression: Used for predicting categorical outcomes, logistic regression models the probability of a data point belonging to a particular class. For example, classifying emails as spam or not spam, or determining whether a customer will click on an advertisement, are common applications. The algorithm uses a sigmoid function to map the linear combination of input features to a probability between 0 and 1. A significant advantage is its efficiency and ability to handle large datasets. However, it assumes a linear relationship between the input variables and the log-odds of the outcome, which might not hold true in all cases.
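As a minimal sketch of the two models above: a least-squares fit of y = mx + b for one input variable, and the sigmoid mapping that logistic regression applies to a linear combination of features. The function names, the sample sizes and prices, and the weights are made up for illustration; a real project would normally use a library and also learn the logistic weights from data, which this sketch does not do.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns slope m and intercept b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    m = covariance / variance
    b = mean_y - m * mean_x
    return m, b


def sigmoid(z):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(features, weights, bias):
    """Logistic regression prediction: sigmoid of the weighted sum of features."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


# Made-up data in which price is exactly 3 times the size.
sizes = [50.0, 80.0, 100.0, 120.0]
prices = [150.0, 240.0, 300.0, 360.0]
m, b = fit_line(sizes, prices)
print(f"slope={m:.2f}, intercept={b:.2f}")

# Hypothetical weights: a weighted sum of zero maps to a probability of 0.5.
print(predict_proba([1.0, 2.0], weights=[0.5, -0.25], bias=0.0))
```

A probability above a chosen threshold (0.5 is a common default) is then read as the positive class.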

Unsupervised Learning Algorithms

Unsupervised learning algorithms learn from unlabeled data, seeking patterns and structures within the data without predefined categories. These algorithms aim to discover hidden relationships and group similar data points together.

- K-means Clustering: This algorithm groups data points into k clusters based on their similarity. The algorithm iteratively assigns data points to the nearest cluster centroid, aiming to minimize the within-cluster variance. An example application is customer segmentation, where customers are grouped based on purchasing behavior or demographics. The algorithm’s strength lies in its simplicity and efficiency. However, the choice of k (the number of clusters) can be subjective and affect the results. The algorithm’s sensitivity to initial centroid placement can also be a weakness.

- Hierarchical Clustering: This algorithm creates a hierarchical tree-like structure of clusters. It can be agglomerative (starting with individual data points and merging them into clusters) or divisive (starting with a single cluster and recursively dividing it into smaller clusters). Hierarchical clustering is useful in identifying the relationships between different data points. A strength is its ability to reveal the hierarchy and structure within the data. However, it can be computationally expensive for large datasets, and the interpretation of the dendrogram (tree diagram) can be complex.
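The assign-then-update loop of k-means described above can be sketched in a few lines. This is an illustrative version that assumes points are tuples of numbers and that the caller supplies the initial centroids; the function name, the sample points, and the starting centroids are all made up.

```python
def kmeans(points, centroids, iterations=10):
    """Basic k-means: assign each point to its nearest centroid, then recompute centroids."""
    for _ in range(iterations):
        # Assignment step: put every point in the cluster of its closest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            distances = [
                sum((a - b) ** 2 for a, b in zip(point, centroid))
                for centroid in centroids
            ]
            clusters[distances.index(min(distances))].append(point)

        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                new_centroids.append(
                    tuple(sum(dim) / len(cluster) for dim in zip(*cluster))
                )
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid in place

        if new_centroids == centroids:  # assignments have stopped changing
            break
        centroids = new_centroids
    return centroids, clusters


# Two visibly separated groups of made-up 2D points.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.6), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, clusters = kmeans(points, centroids=[(1.0, 1.0), (8.0, 8.0)])
print(centroids)
print(clusters)
```

Starting from different initial centroids can produce a different grouping, which is the sensitivity to initialization mentioned above; library implementations typically rerun the algorithm from several starting points.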

Comparison of Machine Learning Algorithms

The choice of a machine learning algorithm depends on the specific task and the characteristics of the data. Different algorithms possess varying strengths and weaknesses.

| Algorithm | Type | Strengths | Weaknesses |
|---|---|---|---|
| Linear Regression | Supervised | Simple, interpretable, efficient | Assumes linear relationships, struggles with complex data |
| Logistic Regression | Supervised | Efficient, handles large datasets, predicts probabilities | Assumes linear relationships, might not capture non-linear patterns |
| K-means Clustering | Unsupervised | Simple, efficient, easy to implement | Choice of k is subjective, sensitive to initial centroid placement |
| Hierarchical Clustering | Unsupervised | Reveals hierarchical structure, identifies relationships | Computationally expensive for large datasets, interpretation can be complex |

Data Preprocessing for Machine Learning

Data preprocessing is a crucial step in the machine learning pipeline. Raw data often contains inconsistencies, errors, and irrelevant information that can negatively impact the performance of machine learning models. Effective preprocessing techniques improve data quality, enhance model accuracy, and ensure reliable predictions.

Data preprocessing involves transforming raw data into a format suitable for machine learning algorithms. This transformation often includes cleaning, transforming, and reducing the data to create a dataset that is easier for the algorithm to understand and learn from. By addressing issues like missing values, outliers, and irrelevant features, data preprocessing helps to prevent biased or inaccurate model outputs.

Importance of Data Preprocessing

Data preprocessing is essential for building effective machine learning models. Raw data frequently contains inconsistencies, errors, and irrelevant information that can skew results and lead to inaccurate predictions. By cleaning, transforming, and preparing the data, we can improve model accuracy, reduce bias, and ensure reliable predictions.

Data Cleaning Techniques

Data cleaning involves identifying and handling errors and inconsistencies within the dataset. This process includes removing duplicate entries, correcting typographical errors, and handling missing values. Thorough data cleaning ensures that the model is trained on accurate and reliable data.

- Handling Missing Values: Missing values can significantly impact model performance. Strategies include imputation (filling missing values with estimated values), deletion (removing rows with missing values), or using advanced techniques like K-Nearest Neighbors (KNN) imputation. The appropriate method depends on the nature of the missing data and the characteristics of the dataset. For example, if a large percentage of data is missing for a specific feature, removal might be a better choice than imputation. Imputation, however, can be used when a smaller percentage of data is missing, or when the missing values are critical to the model’s outcome.

- Removing Outliers: Outliers are data points that deviate significantly from the rest of the data. They can negatively influence model performance and should be addressed through techniques like capping, winsorizing, or removal, depending on the context. For example, if an outlier represents a data entry error, it can be removed. If the outlier represents a valid, though unusual, data point, it might be better to keep it and potentially adjust the model to account for its presence.
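The two cleaning steps above can be sketched as follows. Missing values are represented as `None` and filled with the mean of the observed values; outliers are capped at fences placed 1.5 interquartile ranges beyond the quartiles. That fence rule is one common convention chosen here for illustration, not something the text prescribes, and the function names and sample values are made up.

```python
import statistics


def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = statistics.mean(observed)
    return [mean if v is None else v for v in values]


def cap_outliers(values, k=1.5):
    """Clip values to the range [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


ages = [20, None, 40]
print(impute_mean(ages))

# The final reading is far outside the range of the others.
readings = [10, 12, 11, 13, 12, 11, 100]
print(cap_outliers(readings))
```

Capping keeps the row in the dataset while limiting its influence; if the extreme value is known to be a data entry error, dropping or correcting it may be the better option, as noted above.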

Feature Scaling Techniques

Feature scaling standardizes the range of features, ensuring that features with larger values do not dominate the model’s learning process. Common techniques include standardization (converting data to have zero mean and unit variance) and normalization (scaling data to a specific range, like 0 to 1).

- Standardization: This method transforms data to have a mean of zero and a standard deviation of one. It is suitable for data with a Gaussian distribution. For example, in analyzing customer spending habits, standardization can ensure that features like age, income, and purchase frequency do not disproportionately influence the model.

- Normalization: This method scales data to a specific range, often between 0 and 1. It is useful when the distribution of the data is not Gaussian. Consider a dataset where features represent house prices. Normalization ensures that the price feature doesn’t overshadow other features.
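Both scaling techniques reduce to a short formula: standardization computes (x - mean) / standard deviation, and min-max normalization computes (x - min) / (max - min). The sketch below applies them to a made-up list of incomes; the function names are illustrative, and the population standard deviation is used as a simplifying assumption.

```python
import statistics


def standardize(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # would be zero for a constant feature
    return [(v - mean) / std for v in values]


def min_max_normalize(values):
    """Rescale linearly so the smallest value maps to 0 and the largest to 1."""
    low, high = min(values), max(values)
    return [(v - low) / (high - low) for v in values]


incomes = [30000, 45000, 60000, 90000]
print(standardize(incomes))
print(min_max_normalize(incomes))
```

Neither function guards against a constant feature, where the denominator would be zero; real pipelines need to handle that case explicitly.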

Feature Engineering Techniques

Feature engineering involves creating new features from existing ones to improve model performance. This process can include combining existing features, creating interaction terms, or applying transformations like log transformations.

- Creating Interaction Terms: Combining two or more features to capture their joint effect can be crucial. For instance, in predicting house prices, combining features like area and location might reveal insights about price patterns.

- Applying Transformations: Transforming features, such as using logarithmic transformations, can improve linearity and model performance. For example, in analyzing population growth, applying a logarithmic transformation to population data can help to model growth rates more accurately.
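A small sketch of both techniques follows. The field names (`area`, `location_score`) and all numbers are hypothetical: the interaction term multiplies two features so a model can see their joint effect, and the base-10 log transform turns a population that grows tenfold each period into evenly spaced values.

```python
import math


def add_interaction(row, first, second):
    """Return a copy of the row with a new feature: the product of two existing ones."""
    enriched = dict(row)
    enriched[f"{first}_x_{second}"] = row[first] * row[second]
    return enriched


def log_transform(values):
    """Apply a base-10 logarithm; every value must be positive."""
    return [math.log10(v) for v in values]


house = {"area": 120.0, "location_score": 0.8}
print(add_interaction(house, "area", "location_score"))

# Made-up population that grows tenfold each period.
population = [1_000, 10_000, 100_000, 1_000_000]
print(log_transform(population))
```

After the transform, equal growth ratios become equal differences, which is why a linear model fits the transformed series more easily.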

Step-by-Step Data Preparation Guide

- Data Collection: Gather relevant data from reliable sources.

- Data Exploration: Analyze the data to identify patterns, inconsistencies, and potential issues.

- Data Cleaning: Handle missing values, outliers, and inconsistencies.

- Feature Engineering: Create new features from existing ones to improve model performance.

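- Data Splitting: Divide the data into training, validation, and testing sets.

- Feature Scaling: Standardize or normalize features to prevent features with larger values from dominating the model. Fit the scaling parameters on the training set only, then apply them to the validation and testing sets, so that no information leaks from the held-out data.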

- Model Training: Train the machine learning model using the training data.

- Model Evaluation: Evaluate the model’s performance using the validation and testing data.
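The data splitting step in the guide above can be sketched as a shuffle followed by slicing. The 70/15/15 proportions, the fixed seed, and the function name are illustrative assumptions rather than recommendations from the text.

```python
import random


def train_val_test_split(rows, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle the rows once, then slice them into train, validation, and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train_rows, val_rows, test_rows = train_val_test_split(range(100))
print(len(train_rows), len(val_rows), len(test_rows))
```

The training set is used to fit the model, the validation set to compare candidate models or settings, and the test set only once at the end for a final performance estimate.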

Model Evaluation and Selection

Evaluating and selecting the best machine learning model is crucial for deploying effective solutions. A poorly evaluated model can lead to inaccurate predictions and wasted resources. Thorough assessment ensures the chosen model aligns with the specific needs of the task, maximizing its potential and minimizing errors. This process involves careful consideration of various metrics and techniques, enabling data scientists to make informed decisions about model performance.

Model evaluation is not a simple task. It requires a deep understanding of the model’s strengths and weaknesses, the characteristics of the data, and the specific objectives of the task. The selection process considers not only accuracy but also factors like computational cost, interpretability, and robustness.

Significance of Model Evaluation

Model evaluation is vital for ensuring the effectiveness of a machine learning model in real-world applications. A well-evaluated model demonstrates a clear understanding of the data, predicts outcomes accurately, and can be trusted to provide reliable insights. Without proper evaluation, models might produce misleading results or fail to meet the desired performance standards. This leads to unreliable conclusions and wasted efforts.

Metrics for Evaluating Model Performance

Various metrics are used to assess the performance of machine learning models. These metrics provide insights into different aspects of model behavior.

- Accuracy measures the proportion of correctly classified instances. It’s a simple metric, but its usefulness depends on the class distribution. With imbalanced classes, high accuracy might not reflect the model’s ability to predict the minority class. For example, when predicting a rare disease, a model that labels every patient as healthy can score high accuracy while never identifying a single case.

- Precision, on the other hand, indicates the accuracy of positive predictions. It’s particularly relevant when the cost of false positives is high. For example, in spam filtering, a high precision ensures that few legitimate emails are incorrectly classified as spam. Precision is calculated as the number of true positives divided by the total number of predicted positives.

- Recall measures the proportion of actual positive instances that were correctly identified. It’s important when the cost of false negatives is high. In medical diagnosis, a high recall ensures that most patients with the disease are correctly identified. Recall is calculated as the number of true positives divided by the total number of actual positives.
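The three metrics can be computed directly from counts of true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN). The sketch below uses a made-up, imbalanced set of labels to show accuracy staying high while recall drops; the function name and the data are illustrative.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Guard against division by zero when there are no predicted or actual positives.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Ten made-up patients, two of whom have the disease (label 1); the model finds only one.
y_true = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Here nine of ten predictions are correct, yet half of the actual positive cases are missed, which is the gap that accuracy alone hides.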

Model Selection Methods

Selecting the best model involves comparing different models on the same dataset. Cross-validation techniques are commonly used to estimate a model’s performance on unseen data by repeatedly splitting the data into training and testing portions. In k-fold cross-validation, the data is divided into k subsets; the model is trained on k-1 subsets and tested on the remaining one. This process is repeated so that each subset serves as the test set once, and the k scores are averaged. The result is a more robust estimate of the model’s generalization ability than a single train/test split provides.
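The fold rotation in k-fold cross-validation can be sketched as an index generator. This illustrative version does not shuffle, so in practice the data would usually be shuffled first; the function name and the choice of 10 samples and 3 folds are made up.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) pairs, one per fold."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


for fold, (train_idx, test_idx) in enumerate(k_fold_indices(10, 3)):
    print(f"fold {fold}: train={train_idx} test={test_idx}")
```

A model would be trained on `train_idx` and scored on `test_idx` for each fold, and the scores averaged to give the cross-validated estimate.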

Overfitting and Underfitting

Overfitting occurs when a model learns the training data too well, including noise and irrelevant details. This leads to poor generalization performance on unseen data. Conversely, underfitting occurs when a model is too simple to capture the underlying patterns in the data. This also results in poor generalization. A good model will balance complexity with the need for generalization.

In terms of the bias-variance trade-off, a model with high variance is susceptible to overfitting, while a model with high bias is prone to underfitting. Finding the sweet spot between these two extremes is crucial for optimal performance.

Model Evaluation Metrics Table

| Metric | Description | Interpretation |
|---|---|---|
| Accuracy | Proportion of correctly classified instances. | Higher is better, but consider class imbalance. |
| Precision | Accuracy of positive predictions. | High precision means few false positives. |
| Recall | Proportion of actual positives correctly identified. | High recall means few false negatives. |

Ethical Considerations in Machine Learning

Machine learning models, while powerful tools, can perpetuate and amplify existing societal biases if not developed and deployed responsibly. Understanding the ethical implications is crucial for ensuring fair and equitable outcomes. This section explores the potential pitfalls and strategies for mitigating them.

Machine learning algorithms, trained on data reflecting societal biases, can inadvertently produce biased outputs. This raises concerns about fairness, transparency, and accountability. Addressing these ethical considerations requires a careful examination of data sources, algorithm design, and the potential impact on different groups.

Potential Ethical Challenges

The development and deployment of machine learning systems present a range of ethical challenges. These range from concerns about data privacy and security to issues of accountability and transparency. Understanding these challenges is essential for responsible development and deployment.

- Data Bias: Machine learning models are trained on data, and if this data reflects existing societal biases, the model will likely perpetuate those biases. For instance, if a hiring algorithm is trained on historical data that shows a preference for male candidates, it might unfairly discriminate against female candidates.

- Lack of Transparency: Many machine learning models, particularly complex deep learning models, are “black boxes.” Understanding how these models arrive at their predictions can be difficult, making it hard to identify and correct errors or biases. This lack of transparency can also make it challenging to hold anyone accountable for outcomes.

- Algorithmic Discrimination: Machine learning models can inadvertently discriminate against certain groups based on protected characteristics like race, gender, or religion. For example, a loan application model might unfairly deny loans to applicants from specific demographic groups.

- Privacy Concerns: Machine learning models often require access to sensitive personal data, raising concerns about data privacy and security. For example, facial recognition technology used for surveillance raises concerns about potential misuse and abuse.

Bias in Machine Learning Algorithms

Machine learning algorithms can inherit and amplify biases present in the training data. This can lead to unfair or discriminatory outcomes. Identifying and mitigating these biases is crucial for ensuring fairness.

- Identifying Bias in Data: A critical step is to thoroughly examine the training data for potential biases. This involves looking for imbalances in representation of different groups, examining the distribution of data points, and checking for correlations between features and protected characteristics.

- Addressing Bias in Algorithms: Various techniques can be employed to mitigate bias in algorithms. These include re-weighting data points to address imbalances, using adversarial training to minimize bias, and incorporating fairness constraints into the optimization process.

- Impact of Bias: Biased algorithms can have significant impacts on individuals and society. For example, biased loan application models can lead to unequal access to financial resources, and biased criminal justice risk assessment tools can lead to disproportionate incarceration of certain groups.
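One simple way to start checking for the imbalances described above is to compare how often a model produces a positive outcome for each group. The sketch below computes per-group selection rates and the gap between the highest and lowest rate, often called the demographic parity difference. This is only one of several fairness measures, and the group labels, predictions, and function names are made up for illustration.

```python
from collections import defaultdict


def selection_rates(groups, predictions, positive=1):
    """Share of positive predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        if prediction == positive:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group selection rate (0 means equal rates)."""
    return max(rates.values()) - min(rates.values())


# Made-up loan decisions (1 = approved) for two hypothetical groups.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
approved = [1, 1, 1, 0, 1, 0, 0, 0]
rates = selection_rates(groups, approved)
print(rates)
print(demographic_parity_difference(rates))
```

A large gap does not prove discrimination on its own, but it flags where the training data and model decisions deserve a closer look.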

Responsible Development and Deployment

Developing and deploying machine learning systems responsibly requires careful consideration of ethical implications and potential biases. This includes clear guidelines, robust testing, and ongoing monitoring.

- Ethical Guidelines: Organizations should establish clear ethical guidelines for the development and deployment of machine learning systems. These guidelines should address data privacy, fairness, transparency, and accountability.

- Robust Testing: Rigorous testing and validation of machine learning models are essential to identify and mitigate potential biases. This includes testing across different demographic groups and diverse data sets.

- Ongoing Monitoring: Continuous monitoring of machine learning models in production is crucial to detect and address any emerging biases or unintended consequences. This involves tracking performance metrics, analyzing user feedback, and identifying areas for improvement.

Ethical Dilemmas in Applications

Real-world applications of machine learning present various ethical dilemmas. Understanding these dilemmas is crucial for navigating the complexities of responsible deployment.

- Facial Recognition: Facial recognition technology, while potentially useful for security, raises concerns about privacy violations and potential misuse. Its accuracy and reliability can also vary across demographic groups, which compounds these concerns.

- Predictive Policing: Predictive policing models, designed to predict crime hotspots, can lead to disproportionate targeting of certain communities. This raises concerns about racial profiling and bias.

Methods to Mitigate Ethical Issues

Several methods can be employed to mitigate the potential ethical issues associated with machine learning. These involve careful data selection, algorithm design, and continuous monitoring.

- Data Auditing: Regular audits of training data can help identify and address potential biases. This involves careful analysis of data sources, looking for imbalances in representation, and examining correlations between features and protected characteristics.

- Algorithm Transparency: Developing more transparent algorithms that provide insights into their decision-making processes is crucial. This helps identify and address potential biases and improve accountability.

- Human Oversight: Integrating human oversight into the development and deployment process can help mitigate the risk of biased or unfair outcomes. This includes involving diverse perspectives in the design and evaluation of models.

Future Trends in Machine Learning

Machine learning is rapidly evolving, driven by advancements in computing power, data availability, and algorithmic innovation. This evolution is poised to reshape numerous industries and aspects of daily life. The future of machine learning promises increasingly sophisticated capabilities, impacting everything from healthcare diagnostics to personalized recommendations.

Emerging Trends in Machine Learning

Several key trends are shaping the future of machine learning. These include the increasing use of explainable AI, the development of more robust and efficient algorithms, and the growing integration of machine learning with other technologies. Together, they point towards more interpretable and trustworthy AI systems.

Advancements in Deep Learning and Neural Networks

Deep learning models, particularly neural networks, are experiencing significant advancements. These improvements encompass more complex architectures, optimized training techniques, and the incorporation of transfer learning. The enhanced capabilities of deep learning are driving progress in areas such as image recognition, natural language processing, and speech synthesis. For instance, recent advancements in convolutional neural networks (CNNs) have led to significant improvements in image classification accuracy.

Impact of Cloud Computing on Machine Learning

Cloud computing plays a crucial role in facilitating machine learning deployments. Cloud platforms provide scalable resources for training and deploying machine learning models, making these processes more accessible and cost-effective for organizations of all sizes. This accessibility allows for the processing of large datasets and the development of more complex models that would otherwise be computationally infeasible. The availability of cloud-based machine learning tools and services has democratized access to advanced AI capabilities.

Role of Machine Learning in Emerging Technologies

Machine learning is increasingly intertwined with emerging technologies like the Internet of Things (IoT), robotics, and autonomous vehicles. In IoT, machine learning algorithms can analyze data from interconnected devices to optimize energy consumption, predict equipment failures, and enhance overall efficiency. In robotics, machine learning enables robots to adapt to dynamic environments and perform complex tasks with greater precision. Autonomous vehicles rely heavily on machine learning for object recognition, path planning, and decision-making.

Predictions for the Future of Machine Learning

The future of machine learning is characterized by a focus on greater personalization and customization. Machine learning models will be designed to tailor their outputs to individual users’ needs and preferences. Examples include personalized education platforms and customized healthcare recommendations. Furthermore, the field will likely see a greater emphasis on explainable AI, ensuring that machine learning models are not only accurate but also understandable. Finally, the development of more robust and efficient algorithms will likely continue, enabling faster processing and more accurate results.

Machine Learning Libraries and Tools

Machine learning has become increasingly accessible due to the availability of powerful libraries and tools. These resources streamline the development process, allowing practitioners to focus on model design and application rather than intricate implementation details. Choosing the right library often depends on specific project needs and the desired outcome.

Popular Machine Learning Libraries

Several popular libraries offer a wide range of functionality for machine learning tasks. They provide pre-built, performance-optimized algorithms and extensive documentation, which greatly simplifies building and deploying machine learning models.

- TensorFlow: Developed by Google, TensorFlow is a comprehensive open-source library. It’s highly flexible, supporting various machine learning models, including deep learning architectures. Its versatility makes it suitable for diverse applications, such as image recognition, natural language processing, and time series analysis.

- PyTorch: Another popular open-source library, PyTorch, is known for its dynamic computation graph. This characteristic allows for greater flexibility in model design, making it an attractive choice for researchers and developers working on cutting-edge projects. Its user-friendly interface and intuitive API contribute to its widespread adoption.

- scikit-learn: Scikit-learn is a robust library focused on classical machine learning algorithms. It provides a wide array of tools for tasks like classification, regression, clustering, and dimensionality reduction. Its strengths lie in its simplicity and efficiency for standard machine learning models.

Benefits of Using Machine Learning Libraries

Leveraging machine learning libraries brings several advantages. These include significant time savings, improved code readability, and access to optimized algorithms. The pre-built functions often come with extensive documentation and support, minimizing development time and enhancing the overall project efficiency.

- Reduced Development Time: Pre-built algorithms and functions significantly reduce the time required for implementing machine learning models.

- Enhanced Code Readability: Well-structured libraries promote cleaner and more readable code, which improves maintainability and collaboration.

- Optimized Algorithms: Libraries often incorporate highly optimized algorithms for faster processing and greater efficiency.

- Extensive Documentation and Support: The availability of comprehensive documentation and active communities provides ample resources for resolving issues and learning best practices.

Building a Simple Machine Learning Model

Using these libraries, creating a simple machine learning model becomes straightforward. The following example demonstrates a basic classification task using scikit-learn.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LogisticRegression

# Load the iris dataset
iris = load_iris()
X, y = iris.data, iris.target

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Initialize and train a logistic regression model
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Make predictions on the test set
y_pred = model.predict(X_test)
```

This example demonstrates the basic steps for loading data, splitting it, training a model, and making predictions.

The process is largely similar across different libraries, with variations in the specific API calls.
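The scikit-learn example stops at making predictions. As a minimal sketch of one possible next step, the snippet below repeats the same setup and scores the predictions against the held-out labels with scikit-learn's `accuracy_score`. The variable name `accuracy` is illustrative, and accuracy is only one of several metrics that could be used here.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Same setup as the classification example: load, split, train, predict
iris = load_iris()
X, y = iris.data, iris.target
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)
y_pred = model.predict(X_test)

# Fraction of test samples whose predicted class matches the true class
accuracy = accuracy_score(y_test, y_pred)
print(f"Test accuracy: {accuracy:.2f}")
```

Scoring on the test split rather than the training split gives an estimate of how the model behaves on data it has not seen.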

Installation and Setup Procedures

Installing and setting up machine learning tools often involves using package managers like pip. Specific installation procedures vary depending on the operating system and the chosen libraries.

- Using pip: The `pip` package manager is commonly used to install machine learning libraries. To install TensorFlow, use the command `pip install tensorflow`.

- Environment Management: Using virtual environments is highly recommended to isolate dependencies for different projects.

- Checking Dependencies: Verify that the installed libraries are compatible with the operating system and with each other. Use `pip freeze` to list installed packages and their versions, and `pip check` to report packages with missing or conflicting dependencies.
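The same kind of check can also be done from inside Python. The sketch below uses the standard library's `importlib.metadata` (available from Python 3.8) to look up installed versions. The helper name `installed_versions` and the list of package names are assumptions chosen for illustration.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    # Distribution names as used with pip; adjust to the libraries a project needs
    for name, ver in installed_versions(["tensorflow", "torch", "scikit-learn"]).items():
        print(f"{name}: {ver or 'not installed'}")
```

Running this inside an activated virtual environment shows what that environment provides, which may differ from the system-wide installation.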

Comparison of Machine Learning Libraries

The table below provides a comparative overview of popular machine learning libraries, highlighting their strengths and weaknesses.

| Library | Strengths | Weaknesses |
|---|---|---|
| TensorFlow | Extensive deep learning capabilities, highly optimized for performance, vast community support. | Steeper learning curve compared to scikit-learn, can be complex for simpler tasks. |
| PyTorch | Dynamic computation graph for flexibility in model design, user-friendly API, strong community support. | Potentially less optimized for performance compared to TensorFlow for some tasks. |
| scikit-learn | Simple and efficient for classical machine learning algorithms, easy to learn and use. | Limited deep learning capabilities, may not be suitable for very large datasets. |

Case Studies of Machine Learning Success

Machine learning is transforming industries, and numerous case studies demonstrate its power to solve complex problems and drive significant business value. These examples showcase how leveraging machine learning algorithms can yield substantial improvements in efficiency, accuracy, and profitability. From streamlining manufacturing processes to personalizing customer experiences, machine learning is proving its worth in a variety of sectors.

E-commerce Recommendation Systems

Effective recommendation systems are crucial for online retailers. They personalize the shopping experience, suggesting products that customers might like, thereby increasing conversion rates and customer satisfaction. These systems learn from customer behavior, including browsing history, purchase history, and ratings. Sophisticated algorithms, such as collaborative filtering and content-based filtering, are used to predict the probability of a customer purchasing a specific product.

The process involves gathering data from customer interactions, training machine learning models on it, and deploying the models to generate recommendations. Retraining on fresh interaction data keeps the recommendations relevant and engaging. Amazon’s recommendation engine is a widely cited example of such a system influencing purchase decisions. The payoff shows up as higher customer engagement and revenue.
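To make collaborative filtering concrete, here is a minimal item-based sketch using NumPy. It is an illustration under stated assumptions, not how any particular retailer implements recommendations. The ratings matrix is made up, and the function names `item_similarity`, `predict_scores`, and `recommend` are hypothetical.

```python
import numpy as np

# Made-up ratings: rows are users, columns are items, 0 means "not rated"
ratings = np.array([
    [5, 4, 0, 1],
    [4, 5, 1, 0],
    [1, 0, 5, 4],
    [0, 1, 4, 5],
], dtype=float)


def item_similarity(r):
    """Cosine similarity between item columns."""
    norms = np.linalg.norm(r, axis=0)
    norms[norms == 0] = 1.0  # avoid dividing by zero for items nobody rated
    normalized = r / norms
    return normalized.T @ normalized


def predict_scores(r, sim):
    """Score each user-item pair as a similarity-weighted average of the user's own ratings."""
    rated = (r > 0).astype(float)
    weights = rated @ np.abs(sim)  # total similarity of the items each user has rated
    weights[weights == 0] = 1.0
    return (r @ sim) / weights


def recommend(r, user, top_n=1):
    """Return indices of the highest-scoring items the user has not rated yet."""
    scores = predict_scores(r, item_similarity(r))
    scores[r > 0] = -np.inf  # never recommend something already rated
    return np.argsort(scores[user])[::-1][:top_n].tolist()


print(recommend(ratings, user=0))
```

Items count as similar when the same users rate them alike, so a user's predicted score for an unseen item leans on their ratings of similar items. Production systems typically work with implicit signals such as clicks and purchases and with far sparser data.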

Fraud Detection in Financial Institutions

Machine learning is effectively combating financial fraud. Banks and financial institutions leverage machine learning models to identify suspicious transactions and prevent losses. These models analyze vast amounts of transaction data, including details like location, time, and transaction amounts, to detect patterns indicative of fraudulent activities. A key aspect is the continuous monitoring and adaptation of the models to evolving fraud patterns.

For example, a model can flag a transaction as anomalous when its amount, location, or timing deviates sharply from the account’s usual pattern, and route it for review. Success is measured by the reduction in fraudulent activity and in the losses it causes. The business benefits include lower operational costs, stronger security, and greater customer trust.
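As a deliberately simplified sketch of anomaly flagging, the snippet below looks at a single feature, the transaction amount, and marks values that sit far from the median using a modified z-score. The amounts are invented, the function name `flag_anomalies` is hypothetical, and the 3.5 cutoff is a common rule of thumb rather than anything taken from a real fraud system. Real deployments combine many features and usually learned models.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return indices of amounts whose modified z-score exceeds the threshold."""
    med = median(amounts)
    # Median absolute deviation: a spread measure that outliers barely affect
    mad = median([abs(a - med) for a in amounts])
    if mad == 0:
        return []  # no spread at all, so nothing stands out under this measure
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Invented transaction amounts for one account
amounts = [42.0, 38.5, 51.2, 40.0, 45.9, 39.9, 2500.0, 44.1]
print(flag_anomalies(amounts))  # → [6]
```

The median-based score is used instead of mean and standard deviation because a single extreme value would otherwise inflate the spread and hide itself.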

Predictive Maintenance in Manufacturing

Machine learning is increasingly used in predictive maintenance. By analyzing sensor data from machinery, these systems can predict potential equipment failures before they occur. This proactive approach minimizes downtime, reduces repair costs, and enhances overall equipment effectiveness. The process involves collecting sensor data, training machine learning models to identify patterns associated with equipment failures, and deploying these models to predict future failures.

For instance, when a model predicts that a machine is likely to malfunction, the predictive maintenance system alerts technicians so they can perform preventative maintenance before a major breakdown occurs. The business benefits include higher productivity, lower maintenance costs, and longer equipment lifespan.
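The collect, train, and predict workflow can be sketched with scikit-learn as follows. Everything about the data is an assumption for illustration: the readings are synthetic, the two sensor channels (temperature and vibration) and their value ranges are made up, and `needs_maintenance` is a hypothetical helper name.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)

# Synthetic readings: columns are temperature (°C) and vibration (mm/s)
healthy = rng.normal(loc=[60.0, 2.0], scale=[3.0, 0.3], size=(200, 2))
failing = rng.normal(loc=[80.0, 5.0], scale=[3.0, 0.5], size=(200, 2))
X = np.vstack([healthy, failing])
y = np.array([0] * 200 + [1] * 200)  # 1 = readings recorded shortly before a failure

# Train a classifier to separate pre-failure readings from healthy ones
model = RandomForestClassifier(n_estimators=50, random_state=0)
model.fit(X, y)


def needs_maintenance(reading, threshold=0.5):
    """Return True when the predicted failure probability exceeds the threshold."""
    probability = model.predict_proba(np.array([reading]))[0, 1]
    return bool(probability > threshold)


print(needs_maintenance([82.0, 5.2]))
print(needs_maintenance([59.0, 1.9]))
```

In practice the threshold is a business decision: lowering it catches more failures early at the cost of more unnecessary inspections.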

Conclusion

In conclusion, machine learning has emerged as a powerful force, reshaping industries and impacting our lives in profound ways. From its diverse applications to its potential future trends, this technology continues to evolve and adapt, promising exciting possibilities. We’ve covered the fundamentals, practical applications, and ethical considerations, providing a holistic perspective on this fascinating field.

Frequently Asked Questions

What are some common misconceptions about Machine Learning?

Many people mistakenly believe that machine learning is solely about complex algorithms. While algorithms are crucial, machine learning also relies heavily on high-quality data. Without good data, even the most sophisticated algorithms can’t produce accurate results.

How much data is needed for Machine Learning?

The amount of data required depends on the complexity of the task and the chosen algorithm. Simple tasks might require less data, while complex ones need substantially more to train the model effectively.
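One way to probe this question for a specific task is a learning curve, which measures validation accuracy as the training set grows. The sketch below uses scikit-learn's `learning_curve` on the iris dataset. The choice of model and the training-set fractions are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import learning_curve

X, y = load_iris(return_X_y=True)

train_sizes, train_scores, test_scores = learning_curve(
    LogisticRegression(max_iter=1000),
    X,
    y,
    train_sizes=[0.2, 0.5, 1.0],  # fractions of the available training data
    cv=5,
    shuffle=True,  # iris is ordered by class, so shuffle before taking subsets
    random_state=0,
)

# One row of scores per training size, one column per cross-validation fold
for size, scores in zip(train_sizes, test_scores):
    print(f"{int(size)} training examples: mean validation accuracy {np.mean(scores):.2f}")
```

If validation accuracy has flattened by the largest size, more data of the same kind is unlikely to help much. If it is still rising, collecting more data may be worthwhile.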

What are the potential risks of using Machine Learning?

Potential risks include bias in data, which can lead to unfair or discriminatory outcomes. Additionally, the lack of transparency in some machine learning models can make it difficult to understand their decision-making processes.

What are the different types of Machine Learning?

Machine Learning encompasses various types, including supervised, unsupervised, and reinforcement learning. Supervised learning uses labeled data, unsupervised learning finds patterns in unlabeled data, and reinforcement learning involves training an agent to make decisions in an environment.
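The difference between the first two types can be seen in a few lines of scikit-learn. This is a minimal sketch on the iris dataset, with the choice of models as an assumption. Reinforcement learning is left out because it needs an environment to interact with, which does not fit a short snippet.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

# Supervised: the model is given the labels y during training
classifier = LogisticRegression(max_iter=1000).fit(X, y)
predicted_labels = classifier.predict(X[:5])

# Unsupervised: the model sees only X and groups similar rows on its own
clusterer = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)
cluster_ids = clusterer.labels_[:5]

print("Predicted classes:", predicted_labels)
print("Cluster assignments:", cluster_ids)
```

The cluster numbers are arbitrary identifiers and need not line up with the class labels, since the clustering model never saw them.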